You know a tweet is a banger when the replies are split evenly between sheer disgust and fervent praise. Even better when there’s genuine confusion about whether it’s supposed to be serious or not.

Last week one such tweet caught my eye. It is, without a doubt, the pinnacle of the form:

Here is a representative sample of the replies:

- “This is freaking awesome”

- “most stupid person in the world”

- “Holy shit I retweeted this earlier thinking it was irony. Can’t believe this guy is actually serious lol”

- “At some point we just deserve the pandemic to wipe us all out because what the fuck is this”

- “I love this story ❤️”

The wildly divergent reactions to a guy using ChatGPT to create a running habit are hilarious, but also, they point towards an important mystery. This tweet captures it well:

It’s true: advice coming from AI really does seem to hit differently for a lot of people than if the same advice came from a book or a blog. It’s great that this guy was able to use ChatGPT to get into running, but if you look at the actual advice the AI gave him, it’s just the standard advice most people already know: start with tiny steps like putting your running shoes out by the door, go on easy runs to start, and gradually work your way up towards longer runs as you gain strength and stamina. Nothing groundbreaking. And yet it worked!

If you start looking, you see different versions of this story pop up all over the place. People are using ChatGPT to create meal plans, get dating advice, make travel plans, and more. It’s impressive that a computer can do all this, but is the text it generates really that different from what you’d find in books or on the web? The people dunking on the running tweet above would argue the answer is “no,” and therefore people who use ChatGPT or other AI products are just suckers getting caught up in the latest technology fad.

For sure, some of the hype around AI is just temporary. Maybe even the guy behind the running thread did that experiment mainly because he wanted to build an audience—his Twitter bio says “I write a newsletter that provides a weekly summary on AI.” But is the idea that there’s nothing more to AI-generated advice than what you could get in books or websites really a fair assessment? Or is there more to the robo-coach phenomenon than meets the eye?

I think there is. At the risk of sounding like another vapid passenger on the AI hype train, I think AI-generated advice represents a huge shift whose consequences we have barely begun to feel.

Here’s why.

Convenience

To start, let’s consider the importance of convenience.

One of the main reasons people turn to AI-generated advice is simply because it's convenient. We live in a world where information is abundant, but finding the exact answer you're looking for can be time-consuming and overwhelming. You search Google, but get inundated with spammy web pages covered in ads and filled with generic advice. You find relevant books, but it takes hours to get to the point.

AI systems like ChatGPT streamline this process. You can quickly ask a question or seek advice on a particular topic, and receive a fast, coherent response. This level of efficiency and instant gratification should not be underestimated. Time and time again, we’ve seen that people choose convenience over almost anything else. Before Uber you could get a cab; it was just more of a pain. People used to download songs for free but switched to Spotify because it was a more convenient experience. And so on.

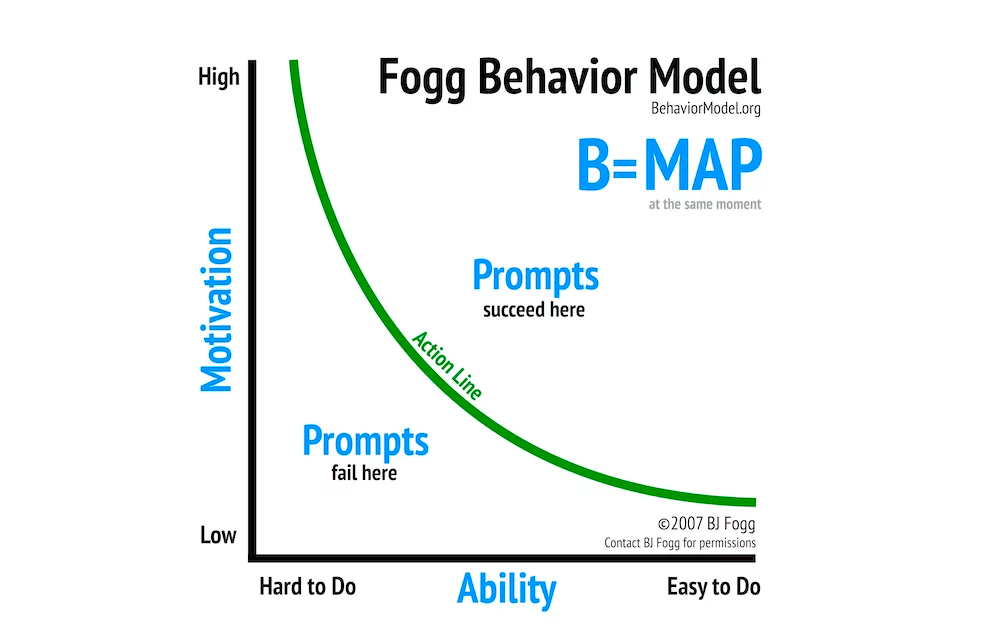

Even if AI-generated advice were basically the same as what you can find in books or Google (and, to be clear, I don’t think this is the case), the ease of access alone would make a huge difference in how people consume and act on that advice. This is for two reasons. First, there are a lot of simple ideas in life that seem obvious, but are hard to remember and apply at the right time (e.g., taking a deep breath to calm down or remembering to be grateful). Even just being reminded of something simple at the right time is valuable. Second, when you make a form of advice (or any other valuable thing) easier to access than alternatives, people will access that advice much more often. The best model I know of to explain this is Stanford Professor BJ Fogg’s behavior model: Behavior = Motivation × Ability × Prompt.

Basically, people perform a behavior if they see a prompt to do it, and their motivation is high enough to overcome whatever level of difficulty is associated with that particular behavior.
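To make that concrete, here is a toy sketch of the model in Python. It is an illustration under loud assumptions, not Fogg’s own formulation: the 0-to-1 scales, the multiplication of motivation and ability, the `ACTION_THRESHOLD` cutoff, and the example numbers are all made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    motivation: float  # assumed scale: 0.0 (none) to 1.0 (very high)
    ability: float     # assumed scale: 0.0 (very hard) to 1.0 (effortless)
    prompted: bool     # did the person run into a prompt to act?


# Hypothetical cutoff standing in for the point where a behavior happens.
ACTION_THRESHOLD = 0.25


def behavior_occurs(s: Situation, threshold: float = ACTION_THRESHOLD) -> bool:
    """Return True if the behavior happens in this toy version of the model."""
    if not s.prompted:
        # Without a prompt, nothing happens, however motivated or able you are.
        return False
    # Low ability (high friction) demands more motivation, and vice versa.
    return s.motivation * s.ability >= threshold


# Same stray thought, same motivation; only the friction differs.
read_a_book = Situation(motivation=0.4, ability=0.2, prompted=True)
ask_a_chatbot = Situation(motivation=0.4, ability=0.9, prompted=True)

print(behavior_occurs(read_a_book))    # False
print(behavior_occurs(ask_a_chatbot))  # True
```

The numbers are arbitrary, but the shape of the result is the point: with motivation held constant, raising ability (that is, lowering friction) is enough to tip a behavior from “doesn’t happen” to “happens.”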

AI radically decreases the friction involved in accessing advice, which means hundreds of millions of people are forming new habits and routines around the basic way they navigate the world and move towards their goals. Previously, if a stray thought didn’t rise to the level of “I should read a book” or “I should hire a coach,” they didn’t act on it. Ironically, having to take a big daunting first step like that violates one of the main themes often seen in advice of all sorts: “start small.” Now, with AI, you can. All you have to do is type a question and you’ll get a pretty good bite-sized starting point.

This in and of itself would be radically important, but it’s not even half of the story.

Personalization

AI-generated advice isn’t just an easier-to-access version of the same advice you can get in books or on webpages. The fundamental experience is totally different: it’s personalized. It takes place in a conversational format where you get just a little information at a time, which allows you to ask follow-up questions and make adjustments on the fly. Most importantly, it gives people a feeling of connection and accountability that is eerily similar to human contact.

This point is so obvious it hardly seems like it’s worth making, but I still don’t think many people fully appreciate the implications of this. It’s like the early days of the web: back then, the general concept that anyone could freely exchange information and transact was obvious, but few could imagine what the world would look like once that simple idea had a few decades to fully play out. The impact of personalized content will be similar: massive implications that take time to play out and are difficult to imagine.

Let’s take the running habit, for example. On the second run some people might feel a bad pain in their shin and wonder if they should stop. Others might get a little blister. And maybe some people will be tempted to go for even longer runs than what their AI coach told them to do, but aren’t sure how far to go. A book will have information for all these circumstances, but navigating to the right page at the right time involves effort. The vast majority of people won’t bother. With AI, getting the right information for your circumstances is as easy as asking.

Also, the conversational nature of AI-generated advice fosters an ongoing dialogue between the user and the AI. This lets users ask follow-up questions, seek clarifications, and receive real-time feedback as they progress towards their goals. It gives the feeling that someone (something?) is paying attention to your progress and rooting for you. The only way to get this feeling before now was by hiring a human coach. Static content can’t replicate this kind of dynamic interaction.

When I’m reading a book now, I often feel a bit frustrated that I can’t ask it questions. It reminds me of how sometimes you see kids try to swipe and tap on a TV screen to interact with it, expecting it to work like an iPad. Once you get used to a new way of doing things, it’s hard to go back.

The consequences of this kind of shift over the long run are huge. What happens to books? What is the best way to teach people things or share new ideas? For the majority of human history, communication happened orally. The written word is a relatively recent invention, but after it emerged, culture was radically transformed. Then there was the printing press, which again reshaped the world by making it economical to mass-produce and distribute texts. The next big step was the internet, and society has hardly begun to digest the consequences, but we all know they are huge. AI-generated advice has the potential to change the way we consume and share information just as profoundly. Just as the written word enabled the dissemination of knowledge on an unprecedented scale, conversational, personalized advice may become the norm, reshaping our educational systems and the way knowledge and expertise are passed on.

Improvement

In addition to convenience and personalization, AI-generated advice has a few more major advantages worth mentioning:

- Continuous improvement: AI systems can be improved over time based on user interactions and feedback. The content isn’t just personalized to you; it also benefits from every other user who provides feedback on the system. This means the quality and relevance of the advice can keep getting better. The same type of improvement loop is not possible with static text.

- Integration with other technologies: AI-generated advice systems can be easily integrated with other tools, such as productivity apps, wearables, and IoT devices. What if your physics tutor had access to your test scores over time? Or imagine if the running guy were able to connect his Apple Fitness or Strava data to ChatGPT. This would be awesome.

- Alignment: at least for now, chatbots are not plastered with ads, and they’re not locked in a desperate competition for clicks on social media or in search engines. Any mistakes they make are accidental. This makes them more trustworthy in the eyes of many than the sorts of info-products we are normally inundated with.

Of course, AI advice is not perfect. It gives false information, it can be somewhat bland and boring, it can be biased, and it can’t replace the genuine relationships that form between real human coaches and their trainees. The illusion of human connection is easily broken when the AI makes a mistake or even just feels a bit too “robotic.”

But those problems are being worked on furiously by the top minds at AI research labs all over the world, and even today AI-generated advice has meaningful advantages over static forms of text. It’s a mistake to assume that the technology as it exists today is what we’ll still have years from now. Instead, we should look at the general improvement curve we’ve seen in the recent past and extrapolate a rough version of that curve into the future. When you look at it this way, it’s hard to argue AI-generated text will stay bogged down by these problems.

There will be backlash and mockery, just like there has been with all large shifts. But it’s important to not let snarky tweets cloud our vision for how the future could be better than the past.


Comments


Would be interesting to be able to "load" coaches with knowledge from particular sources & books.

Might be as simple as prompting, but I'd love to see the ability to e.g. have a relationship coach know all of the Gottmans' or Hendricks' work and then coach according to their methods.

You could test out distinct programs this way or even create a curated blend

Or author your own

Nice