Why Building in AI Is Nothing Like Making Conventional Software

Introducing Source Code, your backstage pass to Every Studio

There’s a fundamental loop underneath everything we do at Every: Write -> build -> repeat. Building exposes you to aspects of the world that were previously hidden. Writing helps you to find a precise, concise way of expressing what you know and why. This loop isn’t necessarily linear—sometimes we start with building and move to writing, sometimes we start with writing instead—but it does lead to, we think, a particularly effective way of creating new things.

That’s why, today, we’re launching Source Code, a new column where we bring you into our product studio as we tinker with what comes next. This first piece, by Every entrepreneur in residence Edmar Ferreira, is an incredible articulation of building products in AI, why the key risk of new AI products is feasibility, and how to deal with it through fast experimentation. Sign up to be the first to try our products as an Every Early Adopter.—Dan Shipper

When I started building my first AI project at Every Studio, I approached it the same way I’d built products in the past: Identify a clear problem, map out a solution, build an MVP (minimum viable product), and iterate from there. It’s a fairly straightforward, software-driven approach: Build fast, test, learn, and improve.

But it didn’t work—so I asked myself: How is building in AI different from building in conventional software?

I joined Every Studio with an ambitious goal: to build nine products in three months—one project every 10 days. My first project, Mindtune, is an AI-driven alternative to traditional adtech and social media algorithms. My hypothesis is that people are fed up with the formulaic, impersonal content in their social media feeds, and that AI offers an opportunity to deliver a more relevant, personalized experience.

I started Mindtune with demand validation because this is where traditional software projects tend to fall apart. You build landing pages, talk to potential customers, analyze competitors, and only then do you invest resources in building out the product. Founders have been following this template for so long that it’s like a reflex. We don’t necessarily stop to ask ourselves: Is building this thing even possible?

Building with AI requires us to break our habits and approach building in a different way. AI products bring with them a unique set of risks, and if you don’t understand them, you’re bound to make mistakes.

As I was building Mindtune, I identified three risk profiles that helped me see exactly what kind of risk I was taking on and, more importantly, what would determine whether it succeeded. I’ll dive into each of the risks, how they relate to each other, and how AI disrupts the traditional startup “risk chain.” My hope is that founders and builders can save themselves a few wrong turns in the idea maze by better understanding where the risks in their idea lie and how best to defuse them.

Sponsored by: Every

Tools for a new generation of builders

When you write a lot about AI like we do, it’s hard not to see opportunities. We build tools for our team to become faster and better. When they work well, we bring them to our readers, too. We have a hunch: If you like reading Every, you’ll like what we’ve made.

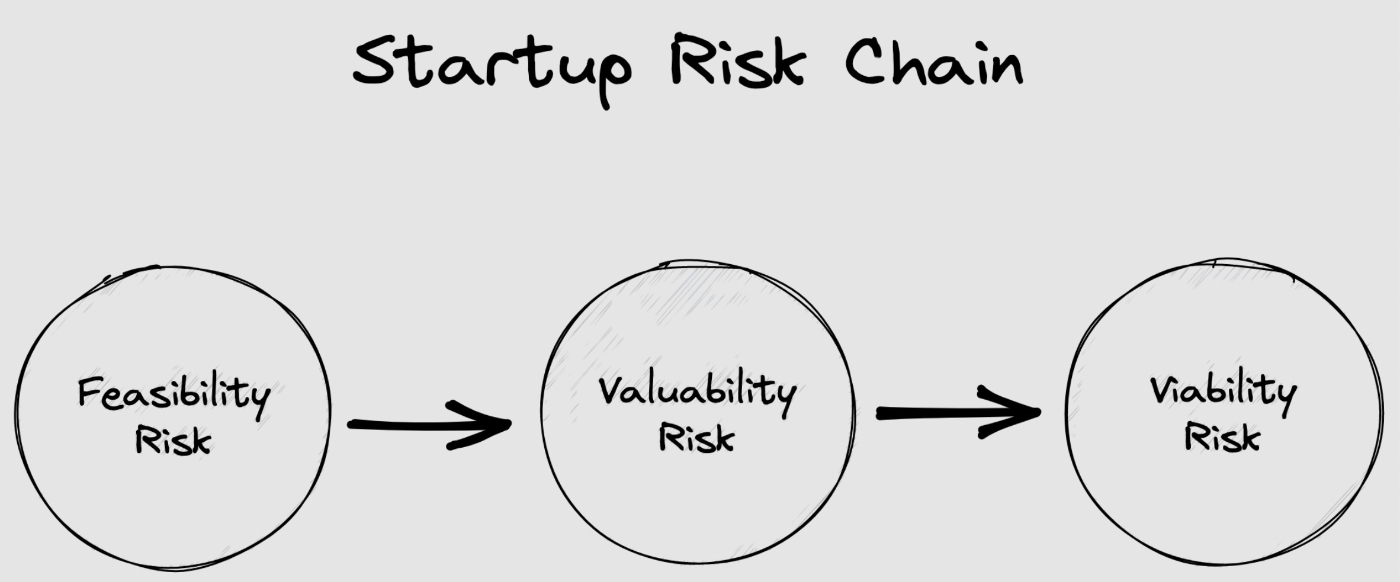

The startup risk chain

There are three types of risks involved in any startup: feasibility, value, and viability.

- Feasibility risk: Can we actually build it? This is the classic engineering challenge. SpaceX, for instance, faced feasibility risk when developing its reusable self-landing rocket.

- Value risk: Can users extract value from it? This is the heart of product-market fit. Airbnb was a great example of value risk—most people initially thought it was a ridiculous idea, assuming no one would ever want to stay in a stranger’s home.

- Viability risk: Can our business extract value from it? Facebook and Google famously struggled with viability risk early on. They knew they had a product people loved, but it took time and experimentation to find a sustainable business model.

The way these three types of risk interact is critical. Think of them as a chain: Feasibility → value → viability. If your product isn’t technically feasible, the other two risks don’t matter. If it’s feasible but not valuable, you’re stuck again. And even if users love your product, you still have to figure out how to make money from it.

Source: Excalidraw/Author’s illustration.

These three risks don’t just arise in sequence; the magnitude of each risk changes depending on the type of product you are building.

In traditional software, feasibility risk is often low. Building the first version of Facebook didn’t involve any groundbreaking technological leaps. Mark Zuckerberg coded it from his Harvard dorm room. The real challenge was in the value and viability risks: Would people use it, and could it become a profitable business?

By contrast, there’s deep tech: projects like gene therapies, fusion reactors, and artificial general intelligence that are bringing whole new technologies to market. There’s a clear demand and business model for these kinds of innovations (for instance, a drug for an existing disease), so the value and viability risks are low. The risk is feasibility: Deep tech startups take the risk of building something that they are not 100 percent sure is possible.

I set out thinking that Mindtune would behave much like a software product, with low feasibility risk and greater hurdles at the value and viability stages. But as I learned, AI introduces unique challenges in both feasibility and value that demand a new approach.

For one thing, the risk profiles are not the same. There are two main categories of AI startups: what I call deep AI startups and applied AI startups.

- Deep AI startups are building foundational models or hardware, such as Groq’s chip and Figure’s humanoid robot. The biggest risk is feasibility. These companies are often engaged in cutting-edge research, and it’s not always clear if the breakthroughs they’re chasing are even possible. This is high-risk, high-reward territory.

- Applied AI startups like Sparkle and Lex leverage existing models and APIs from companies like OpenAI. The key risk is value. Applied AI companies need to prove that the AI they’re using creates meaningful value in a way that’s better, faster, or more efficient than non-AI solutions. There’s also a feasibility risk: The AI models might not always perform in the way you expect, requiring more thought and refinement to get good outcomes.

Mindtune is an applied AI product: It leverages existing AI models to deliver the desired result of a more personalized social media feed. I was confident that the value was there (that users would welcome a different social media experience) and that the business model had been validated by existing products. But the more I thought about it, the more I realized I had missed an important step: thinking through the feasibility of the technology. I had assumed that being able to engineer the AI model to deliver a result was the same as getting the right result consistently. I underestimated the feasibility risk of building in AI, even applied AI.

AI’s unique feasibility challenge

Traditional software is fundamentally deterministic: The code produces predictable outputs if the logic and parameters are set correctly.

Generative AI has an inherent stochastic dimension: Results aren’t always consistent, and output quality can fluctuate based on the input data and nuances in the model itself. It requires constant testing to determine if the results are reliable and valuable enough for users. As a result, traditional engineering intuition doesn’t fully apply.
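To make the contrast concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of how Mindtune was tested: `fake_generative_model` is a hypothetical stand-in that samples from a fixed list instead of calling a real model, and `consistency` is just one simple way to put a number on how often repeated runs agree.

```python
import random
from collections import Counter


def deterministic_function(text: str) -> str:
    """Conventional code: the same input always yields the same output."""
    return text.strip().lower()


def fake_generative_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a model call (assumption, not a real API).

    It ignores the prompt and samples one of several canned outputs,
    which is enough to mimic run-to-run variation.
    """
    candidates = ["headline A", "headline A", "headline B", "off-topic reply"]
    return rng.choice(candidates)


def consistency(outputs: list[str]) -> float:
    """Share of runs that produced the most common output (1.0 = fully consistent)."""
    if not outputs:
        raise ValueError("need at least one output")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


rng = random.Random(0)  # seeded so the sketch is repeatable
prompt = "  Write An Ad Headline  "

det_runs = [deterministic_function(prompt) for _ in range(20)]
gen_runs = [fake_generative_model(prompt, rng) for _ in range(20)]

print("deterministic consistency:", consistency(det_runs))
print("generative consistency:   ", consistency(gen_runs))
```

With a real model, exact-match agreement is usually too strict a measure, so a quality rubric or human review would likely replace the comparison inside `consistency`.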

Over time, you start to build a sense of what an AI model can or cannot do, but those instincts are less calibrated than they would be with traditional software. Even highly experienced AI engineers encounter unexpected results. The technical feasibility risk is greater than that of traditional software because models can surprise you—both positively and negatively—during testing. But it’s not as daunting as it is in deep tech, where fundamental scientific breakthroughs might be required to move forward. Instead, the risk of generative AI sits somewhere between software and deep tech—feasible but unpredictable.

Because of this unpredictability, working with generative AI requires a much more experimental approach. In traditional software, a well-built first version may need minor tweaks—a change to button placement here, clearer copy there—rather than a complete overhaul. With generative AI, however, that first version might require continuous “tuning”—adjusting prompts, incorporating additional data, tweaking parameters—to improve its reliability and user value. And each adjustment can slightly shift the outcomes, so the cycle of iteration and testing is essential for getting the desired results.

With Mindtune, I started building the software experience (wireframes, logins, etc.) before testing the models (GPT-4o, Claude 3.5 Sonnet, Gemini 1.5 Pro, and Llama 3.2) to see if they were capable of generating good enough content for the personal ads. That was a mistake: When I finally did evaluate the quality of the models’ output, they returned inconsistent results. I should have evaluated the models before I worried about the software components because it’s the quality of the underlying model, not the software that sits on top of it, that ultimately determines the project’s feasibility.
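One way to run that model check first is a small feasibility harness: sample each candidate model several times per prompt, score the outputs, and compare the pass rate against a bar you set in advance. The sketch below is hypothetical throughout. The stub models, the `is_acceptable` check, the prompts, and the 0.9 threshold are all assumptions made for illustration, and in practice each stub would be replaced by a call to a real provider.

```python
import random
from typing import Callable

# A "model" here is any function that maps (prompt, rng) to an output string.
Model = Callable[[str, random.Random], str]


def make_stub_model(good_rate: float) -> Model:
    """Hypothetical stand-in for an API call (assumption, not a real client).

    Returns acceptable output with probability `good_rate`.
    """
    def model(prompt: str, rng: random.Random) -> str:
        return "relevant ad copy" if rng.random() < good_rate else "generic filler"
    return model


def is_acceptable(output: str) -> bool:
    """Placeholder quality check; a real one might be a rubric or human review."""
    return "relevant" in output


def pass_rate(model: Model, prompts: list[str], samples_per_prompt: int,
              rng: random.Random) -> float:
    """Fraction of sampled outputs that pass the quality check."""
    results = [
        is_acceptable(model(prompt, rng))
        for prompt in prompts
        for _ in range(samples_per_prompt)
    ]
    return sum(results) / len(results)


def feasibility_report(models: dict[str, Model], prompts: list[str],
                       threshold: float, samples_per_prompt: int = 10,
                       seed: int = 0) -> dict[str, tuple[float, bool]]:
    """Map each model name to (pass rate, whether it clears the threshold)."""
    rng = random.Random(seed)
    report = {}
    for name, model in models.items():
        rate = pass_rate(model, prompts, samples_per_prompt, rng)
        report[name] = (rate, rate >= threshold)
    return report


models = {
    "always_good": make_stub_model(1.0),
    "flaky": make_stub_model(0.6),
    "never_good": make_stub_model(0.0),
}
prompts = ["ad for a hiker", "ad for a home cook", "ad for a student"]

report = feasibility_report(models, prompts, threshold=0.9)
for name, (rate, feasible) in report.items():
    print(f"{name}: pass rate {rate:.2f}, clears bar: {feasible}")
```

If no candidate clears the bar, that is a signal to keep tuning prompts and data, or to reconsider the idea, before spending time on wireframes and logins.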

This iterative process also demands an intuitive sense of when to stop or pivot. There’s a delicate balance between pushing a model’s capabilities and recognizing when it’s hit a limit. Sometimes, despite repeated tweaks, the output may never reach an acceptable quality, and you should cut bait. Alternatively, you might sense that a few more iterations could produce the results you’re aiming for.

However, there’s nuance at this stage, too. Sometimes, the lack of feasibility in applied AI is a sign that the project isn’t worth pursuing. At other times, you may be confident that the value is there despite the low feasibility, so you should pivot rather than abandon the project. You may start out thinking you’re building an applied AI project and realize that what you’re actually building is deep AI, and that in order to make the project feasible, you must go into research mode and build your own model. Your feasibility risk increases, but the project may be even more valuable, and therefore more worth pursuing.

Know your risks, know your path

Whatever you build, you need to understand the risk profile, but it is especially crucial for AI. If you know the nature of the risk you’re taking, you can figure out where to prioritize your resources and energy. It also forces you to ask the right questions at each stage: Can we build this? Will people use it? And only then, once those checkpoints are cleared: Can we build a sustainable business around it?

AI startups, whether applied or deep, operate on a different level of complexity than traditional software products. They require a deeper understanding of how risks interlock and a willingness to navigate uncharted territory. Many developers assume that using generative AI APIs eliminates technical risk—that they’re creating “just another wrapper.” But that’s deceptive. Even when using existing models, there’s a significant level of experimentation involved.

Underestimating this technical risk can lead to wasted time and resources. It’s easy to think that since the heavy lifting is done by the AI model, you can focus solely on market demand and business models. But in reality, ensuring that the AI performs as needed is a substantial part of the challenge. Achieving reliable and valuable outcomes requires more than just hooking up an API; it demands continuous tweaking, testing, and a deep understanding of the model’s behavior. For my next project, I’ll start here.

Thanks to Katie Parrott for editorial support.

Edmar Ferreira is an entrepreneur in residence at Every. He is the founder of EverWrite, an AI SEO and content marketing leader in Latin America. You can follow him on X at @edmarferreira and on LinkedIn, and Every on X at @every and on LinkedIn.

Comments

Really great post. Interesting to consider with AI for legal services - part of the feasibility risk is ethical, and it's likely that current regulatory frameworks around unqualified practice are not going to be able to properly capture the real risk profile here.

@JPak this makes a lot of sense and it will become more relevant as the model capability increases.

Very good and timely (for me) outline of key considerations for builders in this new environment! I think for many people the pivot to "deep AI" will be infeasible but that doesn't necessarily mean the project is dead. One of the exciting things about the AI revolution-in-progress is how quickly the baseline models improve *without* your input. So one reasonable option, given the pace of foundational model improvement, is simply to set the project aside for even a mere 3-6 months. There is a very real chance it will be viable when you pick it back up again, which is just insane and to my knowledge hasn't really been the case for cutting-edge tech pretty much ever (in terms of how long you'd have to wait for someone else to figure out the core problems you're facing).

There is also the key factor of foundational models being so highly accessible so immediately, which again has largely not been the case with other tech TMK, whether through genuine withholding (not explaining how your tech works, much less open sourcing it), or de facto inaccessibility (the new tech is hard to understand or make use of due to its complexity). AI has the incredible qualities of moving very quickly *and* being something that almost anyone can immediately make use of the moment it is updated. A new foundational model comes out and it's almost immediately exposed via the same or a very similar API (and chat interface) as you've already been using. New features like custom training, Embeddings, etc. take a little time to figure out to be sure, but are still incredibly accessible compared to many "open" but technically challenging platforms and systems of the past (try writing your own IMAP client for example).

But yes, the broader points of the article remain strong. This is just more color and nuance to the playing field.

@Oshyan "So one reasonable option, given the pace of foundational model improvement, is simply to set the project aside for even a mere 3-6 months. There is a very real chance it will be viable when you pick it back up again, which is just insane and to my knowledge hasn't really been the case for cutting-edge tech pretty much ever"

This is an excellent point! I have some projects on my backlog that I revisit from time to time to see if the models have become good enough.

Thanks for writing that, Ed! It's a valuable piece of content and a helpful framework for everyone out there building AI-based products. Keep rocking, writing, and publishing! ;-)

In a few months, I am going to embark on a project to build a knowledge bot in my company to assist the Product Managers. This framework is going to help me approach the project. I would have started with wireframes, like you said. Thanks for writing this.