The Case for Cyborgs

Augmenting human intelligence beyond AI will take us much further than creating something new

January 8, 2024

2023 was the year AI went mainstream. The big question in 2024 is: how far can this generation of AI technology go? Can it take us all the way to superintelligence—an AI that exceeds human cognitive ability, beyond what we can understand or control? Or will it plateau?

This is a tricky question to answer. It requires a deep understanding of machine learning, a builder’s sense of optimism, and a realist’s level of skepticism. That’s why we invited Alice Albrecht to write about it for us. Alice is a machine learning researcher with almost a decade of experience and a Ph.D. in cognitive neuroscience from Yale. She is also the founder of re:collect, which aims to augment human intelligence with AI.

Her thesis is that this generation of AI will peter out performance-wise fairly quickly. In order to get something closer to superintelligence, we need a different approach: the augmentation, rather than replacement, of human intelligence. In other words, we need cyborgs.

I found this piece illuminating and well-argued. I hope you do, too. —Dan

In the year-plus since the world was introduced to ChatGPT, even your Luddite friends and relatives have started talking about the power of artificial intelligence (AI). Almost overnight, the question of whether we will soon have an AI beyond what we can understand or control, a superintelligence (SI) that exceeds human cognitive ability across many domains, has become top of mind for the tech industry, public policy, and geopolitics.

I’ve spent much of my career straddling human cognition, cognitive neuroscience, and machine learning. In my Ph.D. and postdoctoral work, I studied how our minds compute the vast amounts of information we process on a second-by-second basis to navigate our environments. As a data scientist, machine learning researcher, and founder of re:collect, I’ve focused on what we can learn about humans from the data they generate while using technology, as well as how we can use that knowledge to augment their abilities—or, at the very least, make their interactions with technology more bearable.

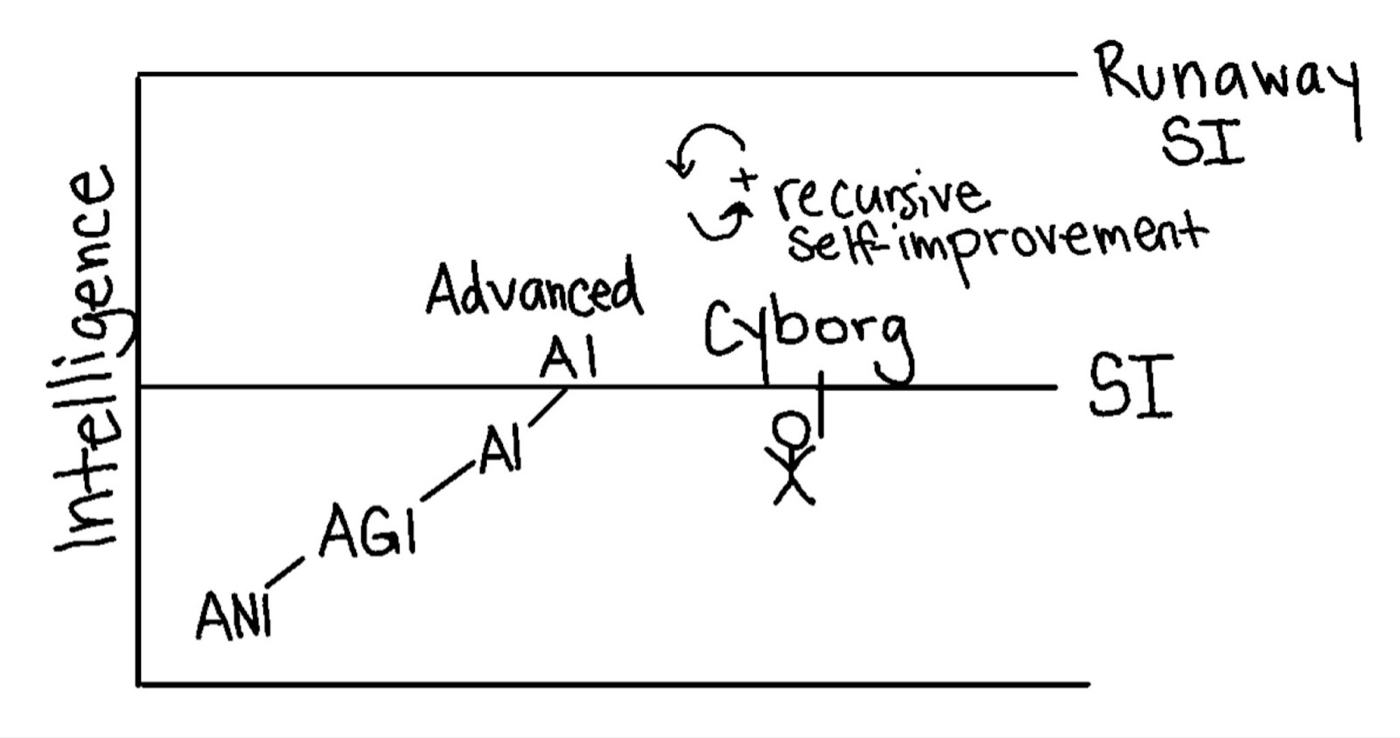

Even with this experience, I know that it can be daunting to understand all the terms about AI that people throw around. Here’s a simple explainer: generally speaking, the goal of AI is to create an artificial intelligence that matches human-level intelligence, while SI is an intelligence beyond what even the most intelligent human is capable of. Sometimes, SI is imagined as a machine that outpaces human intelligence. But it can also be a human that’s been augmented—or even a group of humans working collectively, like a team at a startup.

The fear that SI may become a “runaway intelligence,” like the Singularity Ray Kurzweil has written so much about, comes from the theory that these intelligent systems might discover how to create even more intelligent systems—which could lead to machines that we can’t control, and that may not share our cultural or moral norms.

Different pathways to superintelligence. Source: the author.

But we can’t plan for runaway SI yet. We still haven’t reached the first step—creating a machine that matches human intelligence, typically measured by the Turing Test benchmark. This first step is a big one—replicating human intelligence in machines has proven to be a much harder task than some early computer scientists anticipated.

Up until about four years ago, we had reached only artificial narrow intelligence (ANI), which could be used to accomplish specific tasks, such as image recognition, as well as or better than humans. With ANI, it wasn’t possible to generalize to a broader range of potentially novel situations or tasks, an ability that would make it artificial general intelligence (AGI). And depending on whom you ask, large language models (LLMs) like GPT-4, which powers ChatGPT, have allowed us to create an AGI.

However, moving beyond AGI to SI is a different challenge. Thus far, LLMs have tended to improve with more compute and access to larger-scale training data, but there’s no guarantee this trend will continue. In fact, I believe we will soon plateau.

If that happens, I propose an obvious but somewhat overlooked path to SI: cyborgs, or a closer integration between humans and machines that can augment our already amazing intelligence. In this article, I’ll lay out my case for cyborgs as a path to SI and how I believe we can get there.

The necessary ingredients for SI

Let’s start by defining what’s needed to get to SI.

SI can’t simply score a few IQ points higher than the most intelligent human on an IQ test. It must be able to learn. Beyond that, it will have to “learn to learn.” It must be able to collect and seek out the right information to make itself more intelligent. This goes beyond finding facts to answering previously unsolved questions. For instance, it could mean that an SI must determine why intelligent beings exist in the first place.

An SI must validate whether it’s making the correct decisions, and learn by experiencing the world. It must reason about what’s happening in the present and make good predictions about what could happen in the future (and update its prior assumptions appropriately). To plan actions, navigate, and learn from the world, an SI would need to have goals and a physical form—meaning that it would most likely be embodied, in a form that could perceive its environment and take action on it.

Finally, an SI would need to be able to innovate. This is possibly the hardest criterion for AI—out of all the human tasks at which it excels, it fares the worst at innovation. For now, at least, innovation is a uniquely human ability—one that would be critical to a system that could recursively imagine and create better versions of itself.

Why it’s important to start with humans

Humans have an evolutionary pressure to survive. Over thousands of years, we have evolved to learn from our environments quickly. We’re also the most advanced intelligence that we know of and can use our creativity to imagine and build tools. We are the ideal foundation for SI.

Why, then, does it feel like so much of the conversation is focused on artificial intelligence that automates or replaces what we do, rather than augments our abilities? One theory is that capitalist pressures have outweighed the most acute evolutionary ones, and the lure of creating the same goods with fewer humans is a strong one. But I think it’s also because humans are messy—we don't fully understand how our biological bodies give rise to our minds and consciousness. That makes us a potentially risky foundation for SI.

We’re not just bits of intelligence bouncing through the world. We have complicated emotions, and nuanced and unspoken social hierarchies. We act irrationally and pursue goals that may not be in our best interests. We don’t exist within the clean confines of an operating system or a laboratory—we act and react in a highly variable world. Creating a machine that can score well on one-off benchmarks doesn’t mean it can read—or care about—morals or social cues. But missing these aspects of human behavior puts AI at a disadvantage, especially as we move beyond the chat box and into the real world. No number of YouTube videos can replace what one learns from physical exploration and social interactions.

Furthermore, when met with different types of contextual information, an SI must be able to understand what’s important and what should be ignored. Motivations and goals guide learning. But our current AIs lack intrinsic motivations—and will likely continue to lack them, even if engineering efforts advance. If an SI can only learn when a human intentionally feeds it information, it’s likely not more intelligent than the human it got that data from.

Finally, if we use a human-first approach, we will have to worry less about the problem of AI alignment and a malevolent runaway intelligence that doesn’t share our values.

Enter cyborgs

For years, when I have told people what my work is, their reaction has been: “Oh no, you’re building the Terminator!”

I’m not. But they aren’t completely off-base, either.

A cyborg is a living being that has integrated with technology to restore a function, produce a function that exists in others but is damaged or missing in them, or augment existing abilities. Based on this definition, cyborgs already exist. If someone uses a hearing aid, they can hear until they remove the device. If you create a grocery list on your smartphone and pull it up when you get to the store, you’re augmenting your flawed memory. We’ve already taken the next step—by attaching ourselves to a more general AI like ChatGPT, we are upgrading our cognitive abilities more widely than if we narrowly augmented a single sense or ability.

And if we can fill the gaps we have as humans and start to augment our creative abilities, we’ll be one step closer to SI. In many ways, human babies, and certainly verbal toddlers, are more capable than our current AI models. They are the product of a genetic code that’s evolved over millennia to be well-suited to operate in our natural world. Humans are imbued with the innate ability to learn language and seek out human connection, but they aren’t born with an understanding of their environments—instead, they are powerful learners. This ability to learn allows us to flourish wherever we may be. In part, the human race is incredibly resilient because we never lose our ability to learn and change.

If we decide to start with humans as the foundation, we’ll have a few gaps to fill before we’re ready for SI. The first is capacity constraints: our heads can’t get much larger than they already are, and we require sufficient calories and rest to sustain life. Our brains have lots of very clever ways to deal with these constraints, like using attention and memory to reduce the amount of information we need to process to a reasonable size. However, even with our tools, we have a limit on how much information we can work with. We have hit an evolutionary ceiling and have to find ways to augment ourselves.

A possible next step is to build a better bridge between humans and the capabilities that AI and better sensors can unlock. We might integrate biometric data from a wearable like an Apple Watch and use it to build highly personalized AI algorithms that are aware of physical and psychological contexts. This bridge would act like an extended mind that expands human capabilities, rather than like a replacement or an ill-fitting prosthetic.

The caveat is that only some humans can currently be augmented. Consider, for example, ChatGPT or neuroprosthetics like cochlear implants. Uneven pricing and access mean that these tools are not evenly distributed. Several countries, including Russia, China, and Italy, have already banned ChatGPT, and most organizations can’t afford the computational resources to train huge models. As we develop technologies to build better cyborgs, we’ll have to make sure they are cheap and widely available enough to avoid furthering divides. If we tailor AI algorithms to individuals and bring hardware into our lives in non-exploitative ways (no retinal implants to serve ads, please!), we can benefit from the myriad of human differences, all amplified. We can even use the combinatorial power of collective superintelligent humans to create something much greater than ourselves.

Alice Albrecht is the founder and CEO of re:collect, a startup building tools that use AI to enhance human intelligence. She has more than 10 years of experience leading and advising teams building AI/ML-powered products. She holds a Ph.D. in cognitive neuroscience from Yale University and did her postdoc at UC Berkeley.
