Sponsored By: Composer

Wall Street legend Jim Simons has generated 66 percent returns annually for over 30 years. His secret? Algorithmic trading.

With Composer, you can create algorithmic trading strategies that automatically trade for you (no coding required).

- Build the strategy using AI, a no-code editor, or use one of 1,000-plus community strategies

- Test the performance

- Invest in a click

Every technology is described by the same words when it comes to market: faster, cheaper, smarter. With the release of Claude 3, an AI model comparable to GPT-4, its creator Anthropic said the typical stuff. The company published evaluations showing that its newest model was on par with, or slightly more powerful than, its peers. It discussed how its models could be run more cheaply.

All of this is cool, but the differences in benchmark testing or price weren’t so drastic as to warrant an essay from this column.

The correct word, the unusual one, the word that Anthropic didn’t use and I feel is most accurate, is something weird.

The right way to describe Claude 3 is warm.

It is the most human-feeling, creative, and naturalistic AI that I have ever interacted with. I’m aware that this is not a scientific metric. But frankly, we don’t have the right tests to understand this dimension of AI. Shoot, we might not even have the right language.

Our team at Every includes some of the few people on the planet that have access to OpenAI’s latest public GPT models, Google’s newest Gemini 1.5 models, and Anthropic’s Claude 3. We’ve also been using LLMs for years—testing, evaluating, scrutinizing, and inventing new ways to work.

In all that labor, we’ve never found an AI able to act as a robust, independent writing companion that can take on large portions of the creative burden—until now. Claude 3 seems to have finally done it. Anthropic’s team has made an AI that, when paired with a smart writer, results in a dramatically better creative product.

This has profound implications. Great writing isn’t just about the prose, or about commas or sentences. Instead, great writing is deep thought made enjoyable. Until Claude 3, most AI models were good thought made presentable. Claude 3 frequently crosses the great/enjoyable Rubicon.

To determine that, I had it work on the hardest pitch of all time: convincing someone to follow Jesus.


Jesus, automated

Every Monday at 2 p.m. ET the entire brood of my in-laws jumps on a Facebook Messenger call. The purpose of our weekly chat is to connect with my youngest brother-in-law, Gage, who is on a Mormon mission to Brazil. We tell him about how his favorite sports teams are doing, he tells us about who he is teaching about Jesus that week. Many of us on the call donned the necktie-and-white-shirt combo and served missions ourselves, so we share pointers on what worked for us.

This Monday, I had an idea. Rather than rely on our experience for guidance, we could summon the collective wisdom of mankind—i.e., we could ask the LLMs for advice on missionary work. So I pulled up Claude 3, GPT-4, and Gemini 1.5, and prompted them with a question about how to help people learning about the Church understand the long list of rules that members are asked to follow.

First, I asked GPT-4 to help my brother-in-law teach in a more compelling way. It outputted a long list of generic advice that felt cold.

Source: Screenshot by the author.

(This went on for another 11 bullets.) Then I tried Gemini. The result was similarly impersonal writing with comparable substance, and it took an extra 20 seconds.

Source: Screenshot by the author.

Finally, I tried Claude 3. It was remarkably gentle, kind, and specific. I was astonished.

Source: Screenshot by the author.

While this test was interesting, it wasn’t possible to draw general conclusions from it. The more compelling example was when we deployed it in our own products at work.

Editor, automated

Last week, my essays flopped. This is an issue, because, ya know, I get paid to write. I felt that one of my pieces—about Reddit’s ad business—had strong ideas, but it didn’t land the way I wanted. For my own edification, over the weekend, I rewrote it so that the introduction was tighter and the thesis statement was obvious, and it was much better.

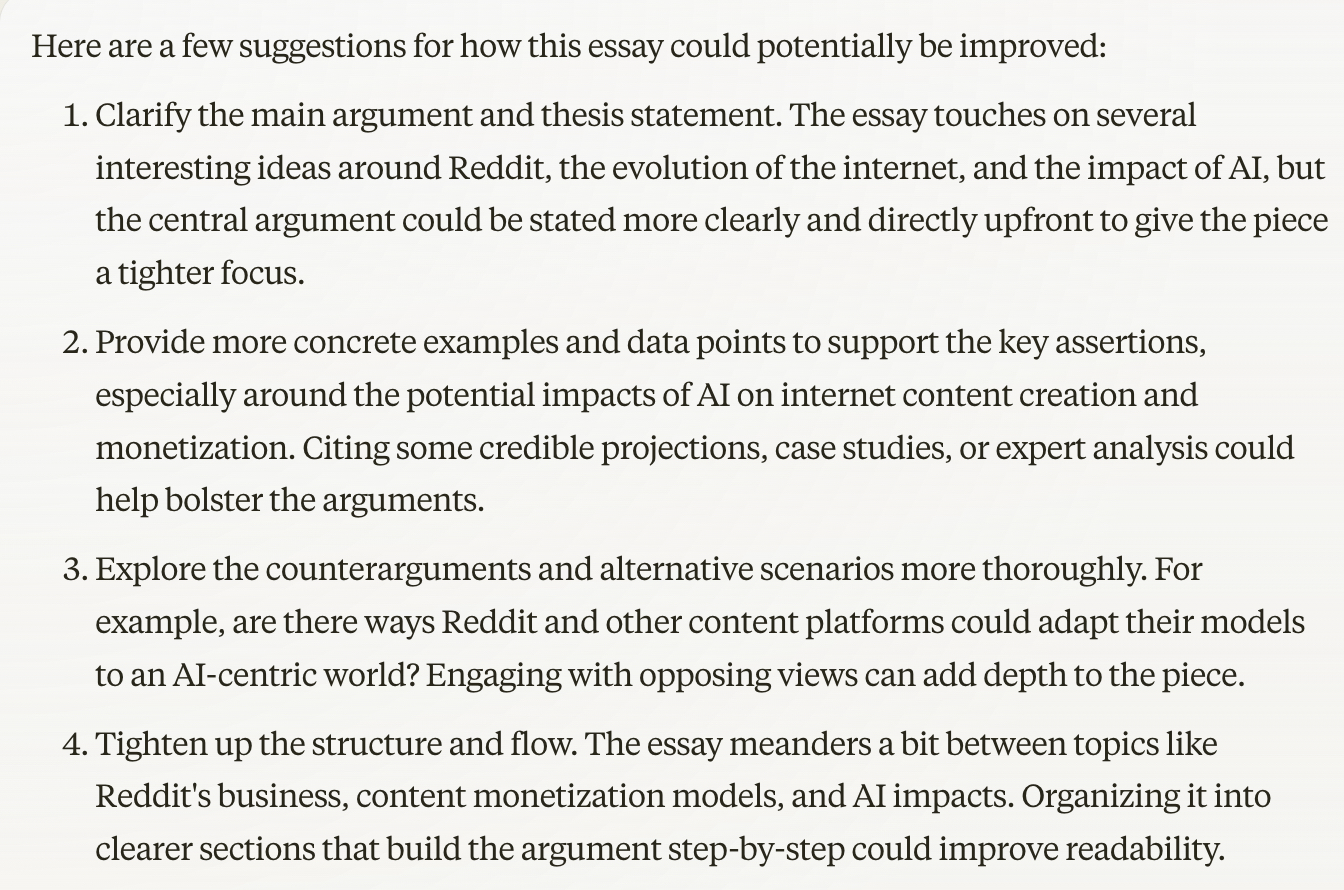

I uploaded the original draft to Claude 3 and asked how it would improve it.

Source: Screenshot by the author.

These suggestions are identical to the changes that I made to the piece. Then I asked Claude to rewrite the introduction to incorporate these fixes—and it made the same edits I did. It cut the meandering anecdote, shortened up a few sentences, and introduced a thesis statement. More importantly, it did so while matching my style and tone of voice. It wasn’t exactly how I would do it, but it was 90 percent of the way there.

I have wanted to find an AI tool to provide editorial support for years, but tools like GPT-4 were never good enough. Now, I don’t think I can write without Claude again.

There are some technical complications

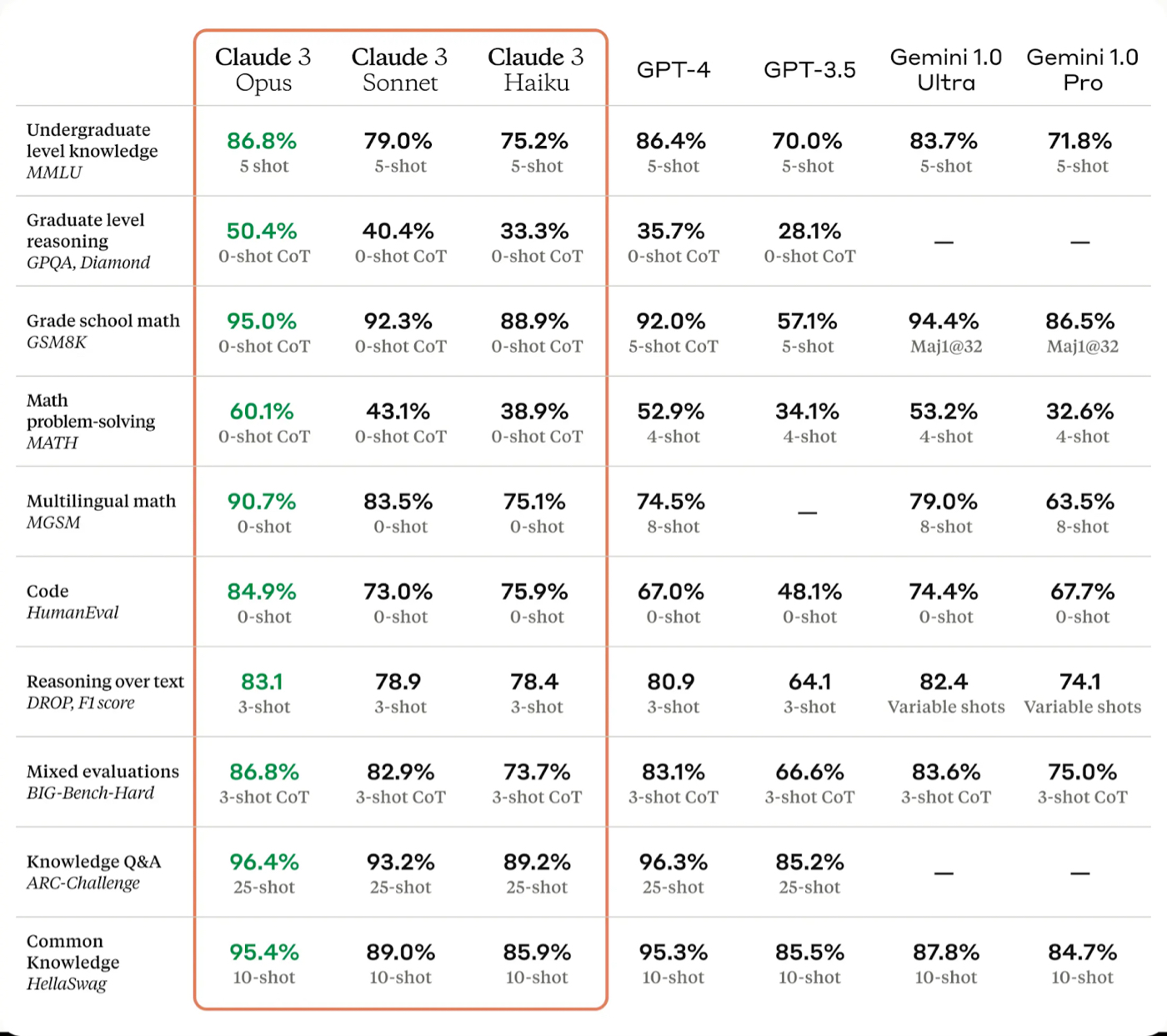

With the launch, Anthropic published a post that included this table, showing Claude 3 outperforming every other option.

Source: Anthropic.

In that same post, there was a footnote: the comparisons were made against the version of GPT-4 from March 2023. OpenAI’s GPT-4 “Turbo” line of models, announced eight months later, outperformed Claude 3 on the benchmarks the two companies both reported. So Claude 3 didn’t really outperform the current version of GPT-4.

That said, none of this matters. For most of these benchmarks, there are only a few percentage points of difference in either direction; sometimes Claude is better, sometimes it’s worse. And none of the tests captured the nuance and warmth that I’ve experienced—because there is no test that can fully measure this. If the benchmarks don’t accurately describe the model's capabilities, it’s a challenging market for everyone involved to sell and buy AI tech.

It seems that there is some soul in the machine, and we are falling prey to something like the parable of the blind men and the elephant. We’re interacting with this alien other, trying our best (and failing) to describe it. On X, founders and researchers have resorted to publishing their own evaluations to crowdsource an understanding of Claude 3’s strengths. But even then, these efforts will likely fall flat.

Andrej Karpathy, a co-founder of OpenAI who is no longer at the company, reinforced my concern that benchmark results don’t generalize well. He wrote on X: “People should be *extremely* careful with evaluation comparisons, not only because the evals themselves are worse than you think, but also because many of them are getting overfit in undefined ways, and also because the comparisons made are frankly misleading.”

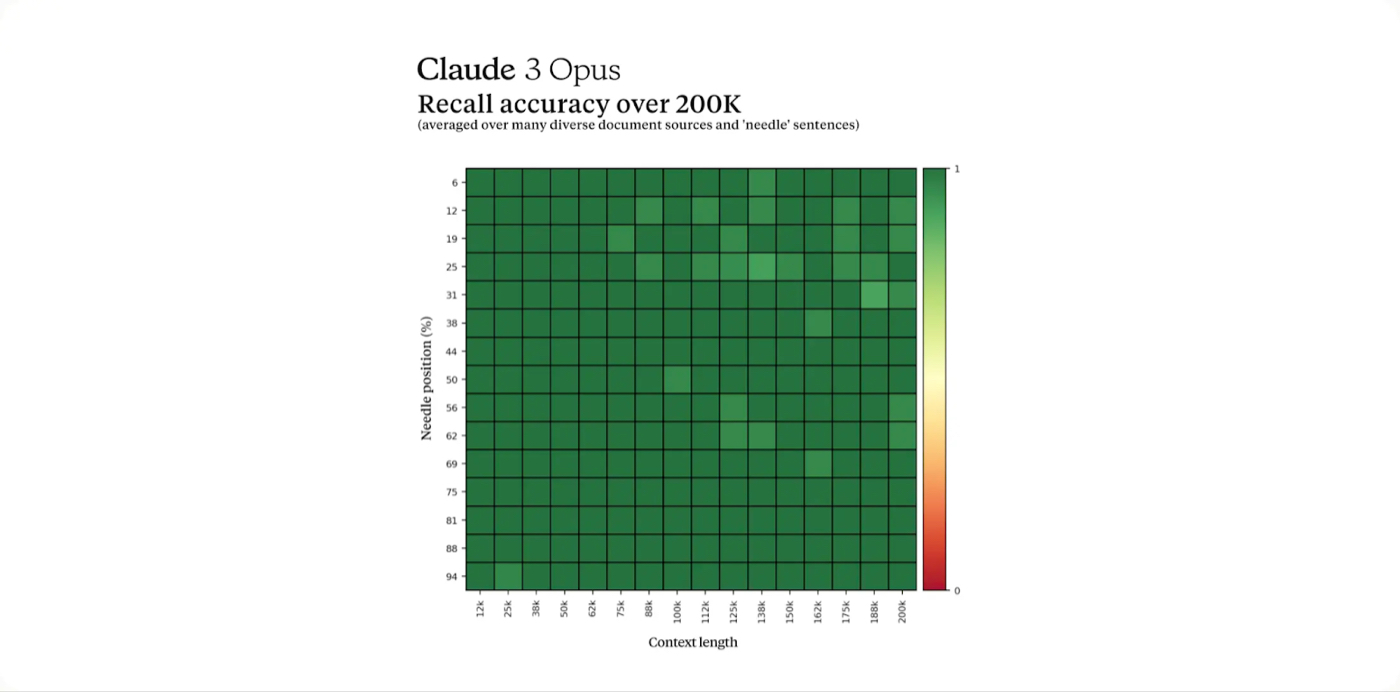

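One of Claude 3’s more notable improvements is its long context window: 200,000 tokens at launch, with inputs of more than 1 million tokens possible for select customers. In Anthropic’s “needle in a haystack” recall tests, its top model retrieved the planted text with more than 99 percent accuracy. This significant upgrade expands the range of workflows and applications possible with AI models, effectively matching the capabilities of Google’s latest release.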

Source: Anthropic.

Perhaps one of the most intriguing aspects of Claude 3’s development is its partial reliance on synthetic data rather than data scraped from the internet. In my conversations with AI researchers, they hypothesized that Anthropic likely used an LLM to generate training data internally. If so, this would be a groundbreaking solution to the data-scaling problem that has long plagued AI development. By reducing the need for organic data and demonstrating that inorganically created datasets are feasible, Claude 3 could pave the way for a future in which concerns about “running out of data” are significantly diminished. Content companies like Reddit that were relying on AI data licensing revenue should also be worried. There is no need to pay them when LLMs can generate training data for you.

So, if benchmarks don’t capture differentiators like Claude’s warmth, and competitors are able to replicate other advances like synthetic data or long context windows, the vector of competition shifts elsewhere. When products can’t easily differentiate themselves, sales can.

How AI companies plan to win

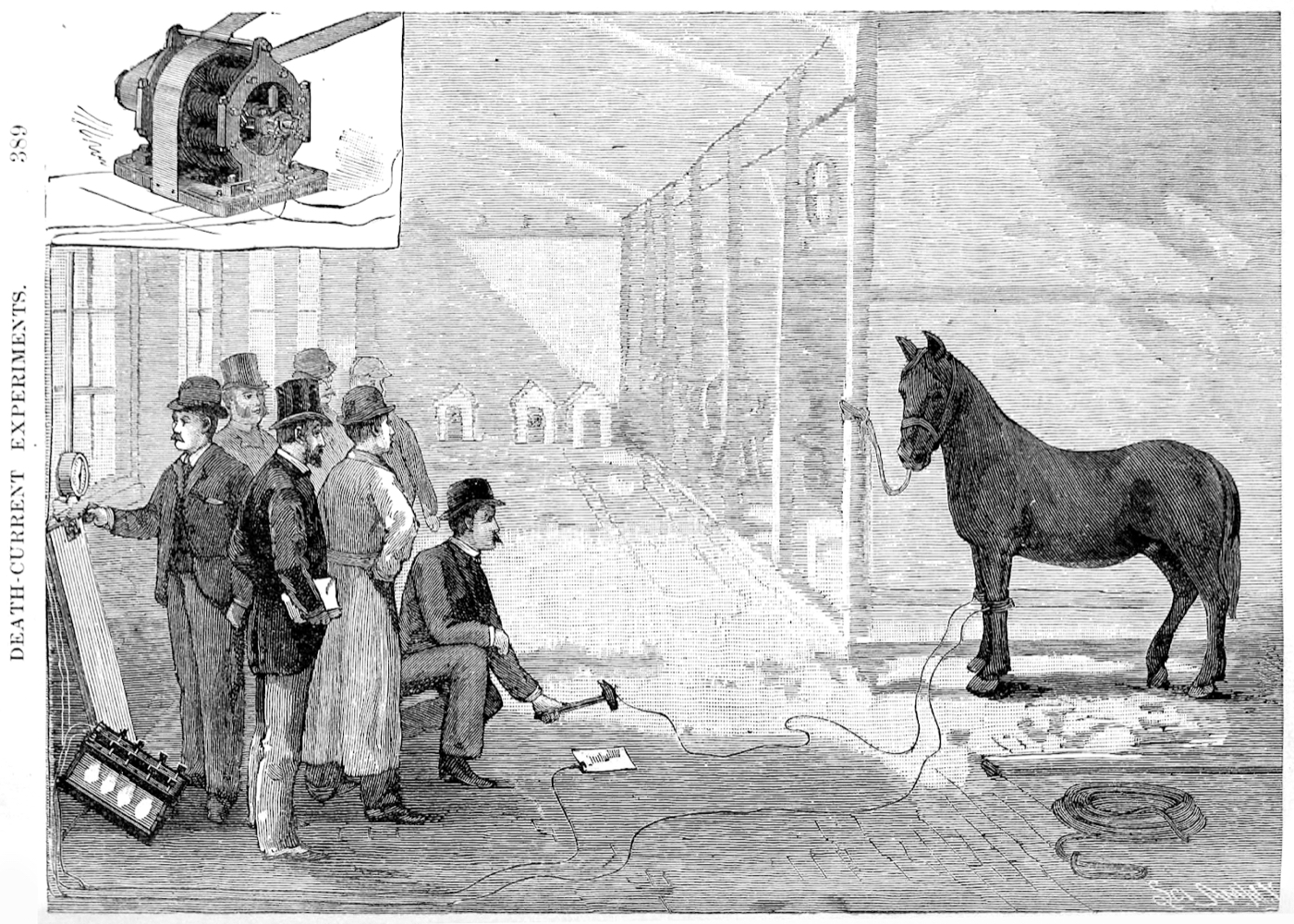

On December 5, 1888, Harold Brown electrocuted a horse.

Source: Fordham University.

Brown committed this act of cruelty in the name of safety. He was using it to argue that Thomas Edison’s direct current (DC) electricity technology was far safer than rival Nikola Tesla’s alternating current (AC), which was transmitted at much higher voltages. To make his point, he used AC to kill several animals. The irony was that Tesla had once worked for Edison, and there is a world where their labs worked together. This “safety” demonstration for the press was a farce, a play to gain market share. In the end, these so-called safety concerns didn’t even matter. The electricity market was won neither by Tesla nor by Edison, but by savvy financiers who used the technology to build regional monopolies. In deployment, both AC and DC were used, each for the jobs it suited.

This story has eerie parallels to that of Anthropic. The company was started in 2021 when ex-OpenAI VPs and siblings Dario Amodei (CEO) and Daniela Amodei (president) felt that OpenAI wasn’t taking AI safety seriously enough. Since that split, OpenAI and Anthropic have battled over model capabilities, sales, and partnerships. Safety, just like in the days of the “current wars” between Edison and Tesla, has been reduced to a marketing point.

In 2017, AI pioneer Andrew Ng argued that “AI is the new electricity” because it would power everything (a view I generally agree with). AI is also the new electricity because it is following the same market development of old electricity. The technical differences between competitors are hard to understand, the companies themselves may end up as low-margin utilities, and value will accrue to founders with a superior go-to-market strategy.

On this last front, Claude 3 came with a litany of announcements.

Distribution: Claude 3 Sonnet, the mid-tier model in the family, is publicly available through Amazon Web Services and in private preview on Google Cloud. This signals that we have moved past the demo phase and are fully in the deployment phase of this technology cycle, as I argued last week. Cool demos can garner small contracts as people try out the technology, but large sales depend on an established salesforce. OpenAI relies mostly on Microsoft as a distribution partner; Anthropic has become uneasy bedfellows with Google and Amazon for similar purposes.

Pricing: Depending on the use case, Claude 3 costs about a third as much as OpenAI’s comparable models on a per-token basis. But again, it is very challenging to compare the two. Each model has strengths that are not reflected in the pricing, and it is unclear whether the price difference is driven by technical innovation, brand power, or investor subsidy. To know whether prices will keep trending downward, we need a better sense of how much these models cost to run compared with how much they cost to build.
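Because providers typically bill input and output tokens at different rates, a per-token price gap can shrink or grow with the shape of the workload. Here is a minimal sketch of that arithmetic. The two price points are assumed, illustrative figures in US dollars per million tokens attached to hypothetical `MODEL_A` and `MODEL_B`; they are not presented as either company’s current price sheet, so substitute real list prices before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Assumed list prices in US dollars per million tokens (illustrative only)."""
    input_per_mtok: float
    output_per_mtok: float


def job_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request: input and output tokens billed at their own rates."""
    return (input_tokens * p.input_per_mtok + output_tokens * p.output_per_mtok) / 1_000_000


def cost_ratio(a: Pricing, b: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of model A as a fraction of model B's cost for the same job."""
    return job_cost(a, input_tokens, output_tokens) / job_cost(b, input_tokens, output_tokens)


# Hypothetical price points chosen for illustration, not quoted from any provider.
MODEL_A = Pricing(input_per_mtok=3.0, output_per_mtok=15.0)
MODEL_B = Pricing(input_per_mtok=10.0, output_per_mtok=30.0)

if __name__ == "__main__":
    # An input-heavy job (summarizing a long document) vs. an output-heavy job (drafting an essay).
    for label, inp, out in [("summarize", 100_000, 1_000), ("draft", 1_000, 4_000)]:
        ratio = cost_ratio(MODEL_A, MODEL_B, inp, out)
        print(f"{label}: A costs {ratio:.2f}x as much as B")
```

Under these assumed prices, the input-heavy job comes out at roughly a third of the cost (0.31x) while the output-heavy job lands closer to half (0.48x), which is one reason a single “X times cheaper” figure depends on the use case.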

Power: Eleven months ago, in a pitch deck to investors, Anthropic promised a model 10 times more powerful than GPT-4 within 18 months. Claude 3 is roughly in line with GPT-4. Where is that model? Why hasn’t it been released yet? If Anthropic is able to deliver on that promise, it would be a world-changing product. An LLM 10 times more powerful than Claude 3 is hard to even wrap your head around. That Anthropic hasn’t delivered it, 11 months into its 18-month timeline, should make investors examine their assumptions about model improvement.

In many ways, Claude 3 raises more questions than it answers. Can Anthropic build the model it promised? Can we figure out a way to better test LLMs? Can Anthropic’s Amazon and Google partnerships keep the company competitive in the race against OpenAI? Can synthetic data allow for infinitely more powerful models?

We don’t know and can’t know for now. In the meantime, this product is a genuine breakthrough for users, and I’m excited to see what people build with it.

Evan Armstrong is the lead writer for Every, where he writes the Napkin Math column. You can follow him on X at @itsurboyevan and on LinkedIn, and Every on X at @every and on LinkedIn.


Comments


Good read regarding Claude 3: https://twitter.com/hahahahohohe/status/1765088860592394250

Biggest takeaway for me was ‘Platforms like Reddit that were relying on AI data licensing revenue should also be worried. There is no need to pay them when LLMs can generate training data for you.’

Try explaining synthetic data to folks that are just beginning to understand how the heck these models work.

Every day I have ‘a moment’ with this stuff.

Super read and one I’ll be sharing with colleagues and friends.

"To determine that I had it work on the hardest pitch of all time: convincing someone to follow Jesus." Curious why you have this bias. Christianity has fared quite well for the ppast couple thousand years.

Thoughtful, insightful. Thank you. I've come to similar conclusions with my use of Claude 3. How fascinating it is to think what comes next.

How does it compare to Pi? Curious because I’ve felt this “warmth” and EQ from day one with Inflection AI’s product and curious whether you’re testing it out alongside these LLM competitors. Thanks!