Sponsored By: Reflect

This essay is brought to you by Reflect, an ultra-fast notes app with an AI assistant built in directly. Simplify your note-taking with Reflect's advanced features, like custom prompts, voice transcription, and the ability to chat with your notes effortlessly. Elevate your productivity and organization with Reflect.

This is the second in a five-part series I’m writing about redefining human creativity in the age of AI. Read my first piece about language models as text compressors, and my prequel about how LLMs work.


You can’t get energy for free. It can be neither created nor destroyed, just moved around.

That's more or less what computers were able to do with text on their own for a long time. Barring a disk failure, text was always conserved, often moved around, sometimes crudely transformed.

But they almost never created it. Other than doing a spell check, if you were seeing text on a computer, it was probably because some human, somewhere, had typed it.

Language models changed this entirely.

Now, you and I can type a few sentences into ChatGPT and watch it expand, character by character, line by line, into something new—composed out of thin air, just for you. Language models take your text and stretch it into a different shape, like glass heated and blown through a tube.

What had previously been an inert collection of bits—a line of characters extending across a screen—is now something different, something potentially alive. When you feed a piece of text to a language model, the text is like an acorn turning into a tree. The acorn itself contains instructions for the tree it will become, and the language model becomes rich dirt, water, and warm summer sun.

In short, language models are free energy for text. Let’s talk about how we can use that function for creative purposes.


A world where every question contains an answer

Language models create a world where every question can be expanded into an answer.

This is critical for creativity because asking questions is a uniquely human thing to do.

Apes, for example, share many human characteristics, including their social nature and a proto-culture that allows them to pass some of what they learn on to their offspring. But they are different from humans in at least one important respect: Apes don’t ask questions.

Question-asking creates room for answers, and answers create room for more questions. This is the first step to getting new ideas and building new things.

Over the centuries, if an answer to a question exists, it’s become increasingly findable for the questioner. Books can answer questions so long as you have the wherewithal to search through them. Google brought us even closer: Many of our most common questions became just a search query away.

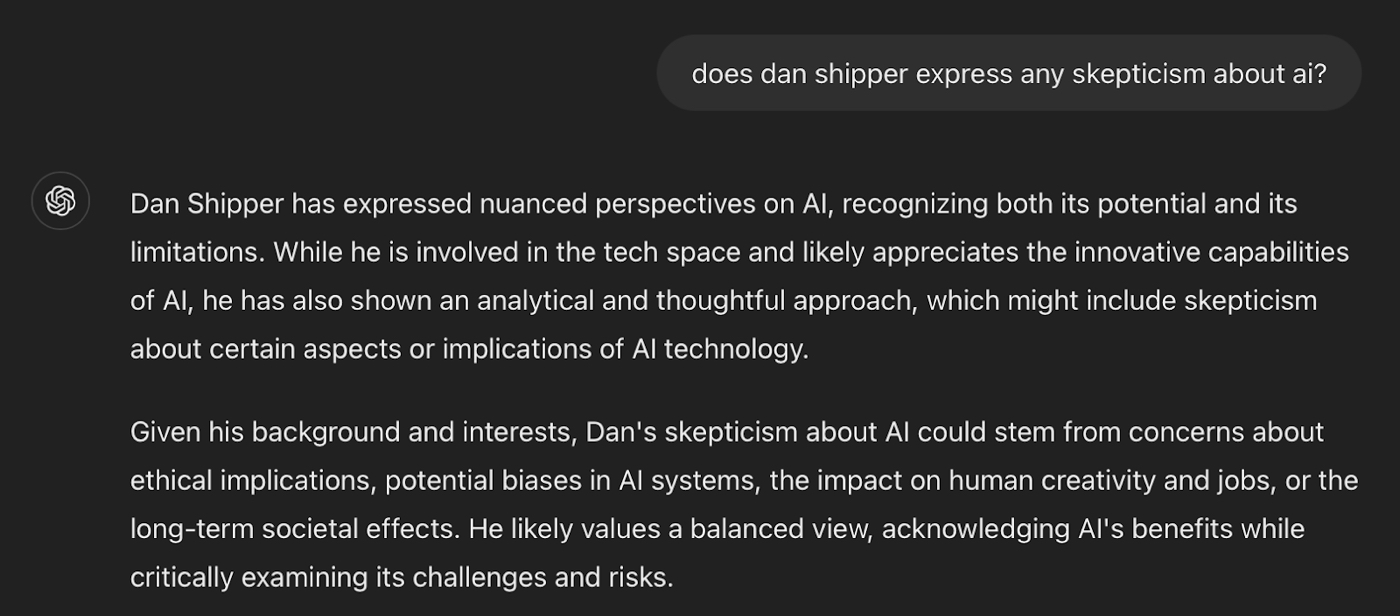

But there are certain of humanity’s answers that have heretofore remained stubbornly unGoogleable. Google only has answers to questions that have already been asked and answered before. For example, try Googling “does Dan Shipper express any skepticism about AI?” I bet you’ll have a difficult time finding a succinct answer.

Language models love questions like this, though:

All screenshots courtesy of the author.

They expand any question into an answer. Because language models always predict what comes next in a sequence, the question itself points to the beginning of its answer.

Anyone who spends time around children and listens to the ceaseless questions they ask will know why this is so important. In the past, a question implied a quest to find an answer. Today, questions are answers already—all they need is expansion through language models.

Let’s talk about some of the most useful kinds of expansions.

Language models as comprehensive expanders

If you want to get a comprehensive understanding of any broad domain of human knowledge, language models can help. Comprehensive expansions from language models are a lot like your own personal Wikipedia written in real time about whatever topic you care most about.

“Tell me about the history of kings in the Roman Empire.”

“What do I do if I find a tick on my arm?”

“What are the top strategies for pricing negotiations with enterprise customers?”

Comprehensive expansions are high-level basic explainers, not modified for any specific intended audience, but pitched to everyone.

These questions could be answered with varying levels of speed and quality prior to ChatGPT, but, I would argue, ChatGPT and other AI models are far better resources. First, because they’re fast. And second, because you can ask follow-up questions.

Which gets us to the next kind of expansion: contextual expansions.

Language models as contextual expanders

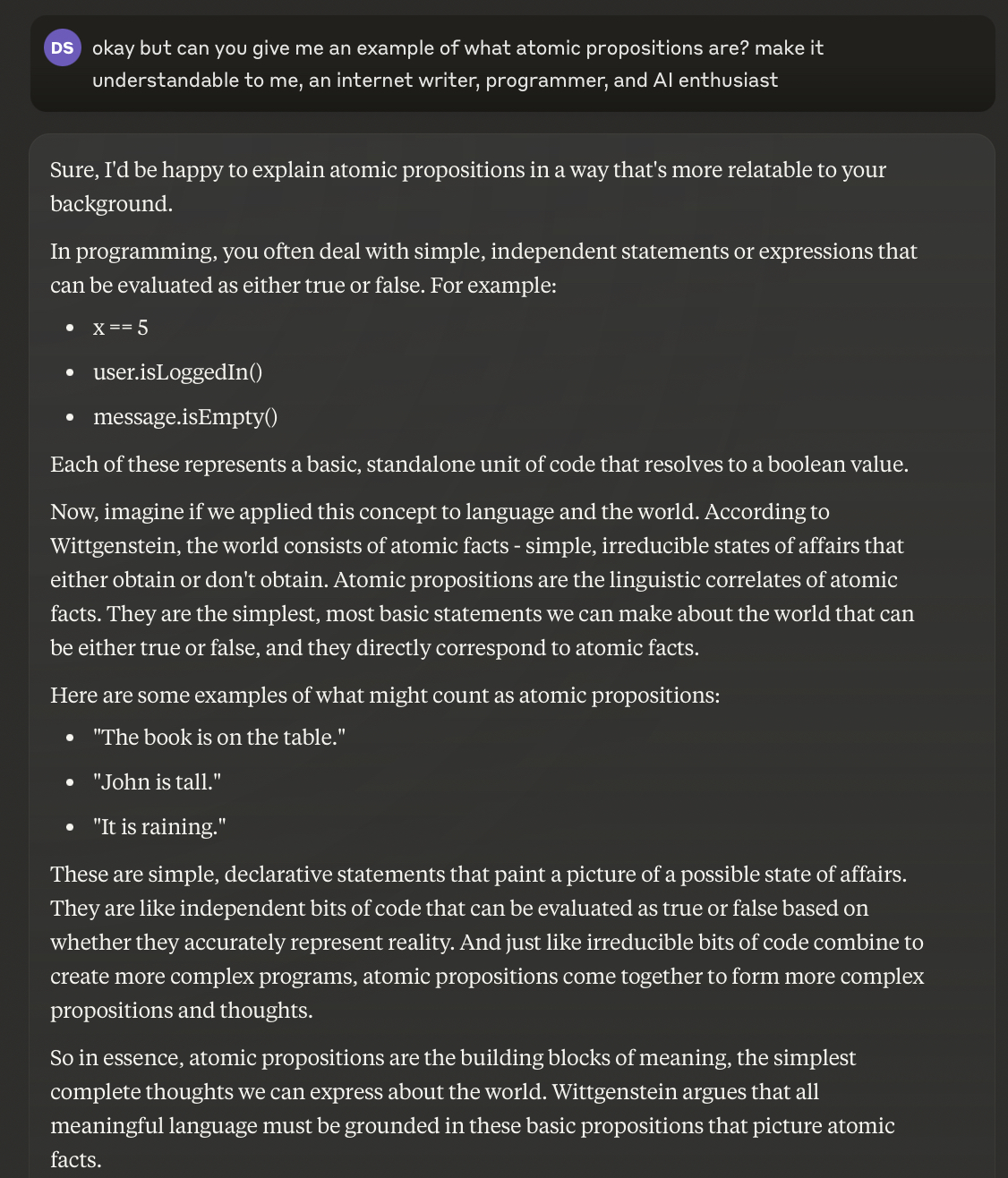

Information becomes knowledge when it is fitted into a particular context. ChatGPT excels at expanding a question into an answer that is most relevant for a specific amount of attention, background knowledge, sensibility, and circumstance.

A large part of creative work is about consuming the raw input you need in order to do your work. Often this includes reading primary sources that are difficult to understand. But AI can expand a specific question about a source into an article written just for you.

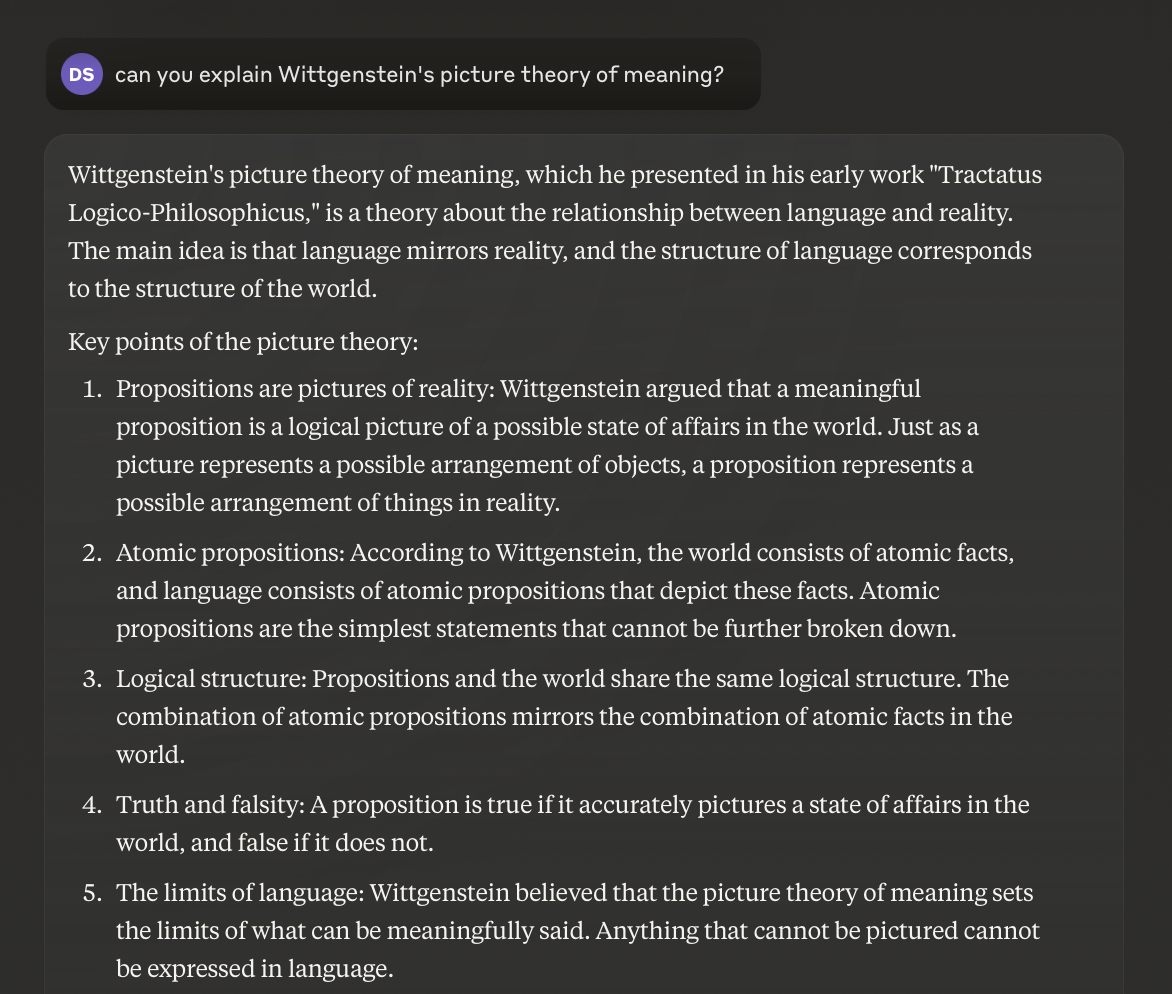

I actually used Claude in this way to prepare for my interview with LinkedIn cofounder Reid Hoffman. I asked it to explain one of the main points of Austrian philosopher Ludwig Wittgenstein’s philosophy of language classic Tractatus Logico-Philosophicus:

This is a good, comprehensive expansion of my question. But it’s high-level and abstract. If I want something more tuned to who I am, I can ask a follow-up, like:

You could answer this question yourself by Googling, but AI brings the best of what you need to know to bear all at once in the form that’s right for you. (If you didn’t find Claude’s explanation useful, you can always ask it to rephrase the answer in a way that clicks with your brain instead of mine.)

This is more important than it might seem.

When I was in third grade, I had designs on writing a novel, so I wanted to learn creative writing. There was only one problem: My mom couldn’t find any creative writing classes for kids my age. They didn’t exist, except for older kids. And my parents didn’t know enough themselves to teach me.

There’s an incredible amount of power in being able to expand a question like, “How can I level-up my creative skills? I’m 9 years old,” into an age-appropriate answer for kids.

This doesn’t just apply to factual expansions. AI is also quite good at creative expansions.

Language models as creative expanders

When I’m looking for a metaphor or a simile in my writing, the first thing I do is go to ChatGPT or Claude and ask it to output 20 of them.

For example, in my piece about Robert Sapolsky’s free-will book Determined, I wrote:

“I fucking love Robert Sapolsky, a Stanford neuroscientist whose books on biological behavior and stress, Why Zebras Don’t Get Ulcers, A Primate’s Memoir, and Behave, are some of the best science writing I’ve ever read. He is, in my opinion, the poet laureate of neurobiology. He is at once rigorous and humanistic, sardonic and compassionate, literary and scientific.

So you must be able to imagine my conflicting feelings upon learning that he wrote a new book (yay!) about free will (yuck!) called Determined. It’s like Scott Alexander writing a 10,000-word essay on the correct way to hang toilet paper. It’s like Annie Dillard writing a new book on whether or not a hot dog is, in fact, a sandwich. It’s like Bill Simmons writing a trilogy about whether Die Hard is really a Christmas movie. (Okay, okay, I’d read that.)”

Guess where those similes came from? An entertaining 20-minute-long session with Claude, during which it helped me both identify what I was feeling about Sapolsky’s book and find a few great similes to express it clearly.

Creative expansions are the truly generative part of generative AI. They make it easy to traverse a space of possibilities.

You can go one word at a time, like generating a simile. But you can also create entire stories from a simple prompt. Many of the parents I know are already using ChatGPT on voice mode to create custom stories for their children, weaving in characters from pop culture with their kids’ lives and interests to create stories that are made just for them.

The ability to cheaply explore creative possibilities is one of the most important uses of language models for creative work. It’s not entirely effortless on our part: We do have to put our taste to work—ask for what we’re looking for, and recognize it when we get it. But it does a lot of the legwork for us.

It’s easier for humans to edit than it is to generate, and we don’t always have the energy to filter through all of the possibilities we might want to in order to find the precise word, the brightest idea, or the best plot twist. Creative expansions help us map the possibility space so we can bring the best of what we find back to our work.

Compressing and expanding

Many of the expansion operations I wrote about earlier in this piece serve a similar purpose to the compression operations I wrote about previously. For example, you can answer a question through expansion or contraction. Which should you pick, and why?

Contraction is like squeezing a lemon into lemon juice. At the end of the squeeze, what’s in your cup is likely to only contain what was in the lemon.

Expansion is more unpredictable. Returning to the acorn metaphor: In general, an acorn is going to produce a tree. But the characteristics of that tree will largely depend on the circumstances of the acorn’s growth. There’s a much higher degree of freedom in terms of the final product with an acorn than a squeezed lemon.

Language model compression is more likely to return a factual response based on the prompt you give it, assuming that answer is found in the text you are compressing. When I built my Huberman Lab chatbot, I included transcripts of Andrew Huberman’s episodes in each ChatGPT prompt because they keep the model grounded in what he actually said. (This is a common technique called Retrieval-Augmented Generation, or RAG.)
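For readers who want to see the shape of that technique, here is a minimal sketch in Python. It is not the code behind the chatbot described above: the function names, the word-overlap scoring, and the sample excerpts are all assumptions made up for illustration, and it stops at building the prompt rather than calling any model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def overlap_score(question: str, passage: str) -> int:
    """Count the words the question and the passage have in common."""
    shared = Counter(tokenize(question)) & Counter(tokenize(passage))
    return sum(shared.values())


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages that share the most words with the question."""
    ranked = sorted(passages, key=lambda p: overlap_score(question, p), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, passages: list[str], k: int = 2) -> str:
    """Place the retrieved passages ahead of the question in one prompt."""
    context = "\n\n".join(retrieve(question, passages, k))
    return (
        "Answer using only the transcript excerpts below.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}"
    )


# Invented placeholder excerpts, not quotes from any real transcript.
transcripts = [
    "Morning sunlight exposure helps set your circadian rhythm.",
    "Caffeine late in the day can disrupt deep sleep.",
    "Cold exposure can raise alertness for several hours.",
]

prompt = build_grounded_prompt("How does caffeine affect sleep?", transcripts, k=1)
print(prompt)
```

The prompt that comes out would then be sent to whichever model you use. Real systems usually rank passages with embeddings rather than raw word overlap, but the idea is the same: the source text travels with the question, so the answer is squeezed out of that text rather than invented.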

By comparison, language model expansion is more creative and poetic. It’ll do a good job of responding factually to common questions. But it shines when it’s asked to go off the beaten path and find previously unexplored possibilities.

When I was trying to find similes for my Robert Sapolsky book review, I didn’t load his entire book into ChatGPT. I just tried to express, briefly, what I was feeling: “I’m writing a review of Robert Sapolsky’s book about free will, and I want to find a few similes to express it. I feel bored and excited about it at the same time. Generate 20.”

I let the language model do the rest. After it returned its first 20, I gave it more instructions and had it generate more.

It wasn’t about answering a precise question about his book. It was about exploring possibilities.

The new world of text expansion

In a world where computers can expand text, inside of every question hides an answer, and every story is written for you. Text expansion is creative and somewhat unreliable, but that’s what makes it exciting. It is lighting a rocket and watching it shoot into the sky. It is dropping a penny off a bridge and peering over the railing to see what happens.

We create conditions and then step back to watch the show. It’s an undeniably powerful part of any creative arsenal.

So, go forth and expand!

We’ll be back next week with the third part of this series: translation.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast AI & I. You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.


