Sponsored By: Viable

This essay is brought to you by Viable, the Generative Analysis platform that redefines data-driven decision-making. Say goodbye to manual data crunching and hello to lightning-fast, AI-powered insights from your unstructured data.

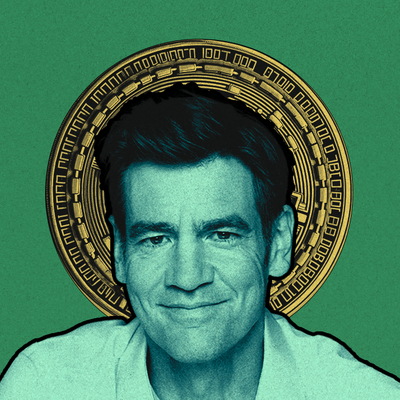

The first thing I asked Replit founder, Amjad Masad, is whether he’d still want his kids to learn to code.

Amjad is both a long-time programmer and the founder of Replit, the newest AI unicorn. Replit is a web-based development environment, and this week it announced that it raised almost $100M at a $1.6B valuation from a16z and a host of other top investors. It has built a GitHub Copilot competitor called Ghostwriter, and part of its mission is to give every programmer an AI team member that can write code for them.

So, I wanted to know, does programming still matter?

He told me he believes the leverage on programming skills has gone way up in the last year. He thinks the current generation of AI tools are inherently augmenting tools, not automating tools. They might change the bundle of skills you need to be a programmer: in many cases, you might act more like a guide or a manager than someone writing the code yourself. But he believes programming skills actually become more important in an AI-driven world. So yes, he still wants his kids to learn to code.

In other words: even though he could—and even though maybe he should—Amjad isn’t blindly banging the AI drum.

He struck me as one of the few people in the space with the right level of excitement. Replit has been prototyping AI tools for the last three to four years, long before they were popular, so it isn’t a Johnny-come-lately entrant into this space. Amjad believes deeply in the long-term potential of the technology, but he can also acknowledge its significant present limitations. He does a good job of not swinging between the poles of mania and doom, and I left the interview feeling grounded and smarter.

We covered his thoughts on two big topics: the future of programming, and how startups can win against incumbents in the AI race. Amjad knows a lot about the second topic, too. Replit is going up against Microsoft in a quest to own how you build software with AI. So it faces the biggest question every one of today’s startups has to answer: how do you build and win with an AI product against a better-funded incumbent that also has access to AI?

Amjad has a plan. Read on to learn more.

Stop manually crunching data 🛑 Leverage AI to generate lightning-fast, powerful insights from your qualitative data.

Viable combines the power of GPT-4 with our cutting-edge analysis technology to distill your data into crucial themes based on churn risk, customer tier, and more. It's like having a team of data scientists at your fingertips, helping you focus on what truly matters.

Join industry leaders like Uber, Circle, and AngelList who trust Viable to boost NPS, slash support ticket volumes, and cut operating costs. Experience the magic of Viable's AI-driven insights and save hundreds of hours each year.

Start your journey with Viable today and unlock the full potential of your qualitative data. Empower your business with the ultimate time-saving, growth-driving solution.

Amjad introduces himself

Hi, I’m Amjad. I’m originally from Jordan, born and raised there. I came to the US in 2012 to work at Codecademy in New York, where I was a founding engineer. While I was there, I built one of the first open-source in-browser coding sandboxes, which was the predecessor to Replit. I was obsessed with making programming easier to get started with and more fun. I wanted to make hacking easy, so you could have an idea and build it fast, instead of needing to plan a lot and set up a big development environment. Making programming more fun and accessible has been my obsession throughout my career.

I worked at Codecademy in New York for a while, then joined Facebook. At Facebook I worked on the React team, and then I was one of the founding engineers on React Native, where I built the entire JavaScript runtime and compiler toolchain. I brought the same ideas to React: fast feedback loops, fast updates, and refresh. I wanted you to be able to work on it as if you were working in a sandbox. In 2016, I went back to browser-based coding and started Replit as a company with my wife, Haya, who’s now our head of design. We pursued the same mission: make programming more fun and accessible.

The importance of learning to code in the AI era

Learning how to code becomes more important in a world with AI.

AI means that the return on investment from learning to code just went way up. AI models are great at generating code, but they go off the rails easily. They’re inherently statistical and stochastic, so they make a lot of mistakes. That will get better asymptotically, but for the foreseeable future, they’ll need human input.

That means in this new world, you can suddenly build incredible things by leveraging these tools to generate code. And, if you can program, you can understand where they’re getting things wrong and fill in the gaps.

You become less of a traditional programmer and more of a guide to bring your ideas to life. These are inherently augmenting technologies, not automating technologies.

Another way to say this is that these models are really good as copilots. I think that will create deflationary pressure, in the sense that individual companies may need fewer programmers. From that perspective, they will have a significant impact on productivity.

That doesn’t mean we’ll need fewer programmers overall, though. I think we’re far from automating programming completely, even though autonomous agents are popular right now. The problem with autonomous agents is that their errors compound. Every call to the LLM carries some chance of error, so by the tenth iteration of a loop, the agent is very likely to have gone off the rails.
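The compounding-error point can be made concrete with a back-of-the-envelope model. This is a toy sketch, not a description of how any real agent behaves. It assumes that each LLM call in an agent loop succeeds independently with the same probability `p`. Under that assumption, the chance that an `n`-step run never goes off the rails is `p ** n`. The function name and the per-step success rates below are hypothetical and chosen only for illustration. They are not measurements of any model.

```python
def chain_success_probability(per_step_success: float, steps: int) -> float:
    """Probability that every step in a chain of LLM calls succeeds.

    Toy assumption: each step succeeds independently with the same
    probability, so the overall success rate is per_step_success ** steps.
    """
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


if __name__ == "__main__":
    # Hypothetical per-call reliability figures, for illustration only.
    for p in (0.99, 0.95, 0.90):
        print(f"per-step success {p:.2f}: "
              f"10 steps -> {chain_success_probability(p, 10):.3f}")
```

Under this toy model, a 95% per-call success rate leaves only about a 60% chance (0.95^10 ≈ 0.599) that a ten-step run stays on track. At 90% per call, the chance drops to about 35%. Real agent errors are correlated and sometimes recoverable, so this only illustrates the direction of the effect, not its size.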

The whole trend in software development over the last 70 years has been toward more predictable output. So I find it unlikely that we’ll come to rely on autonomous agents, with their inherent unpredictability. They’re just not smart enough for that right now.

I think this is something that’s missed in the larger conversation around AI.

AI doom is bullshit

We are now in a vicious cycle of hype and fear where the incentives of the players involved make the cycle extremely bipolar.

On the one hand, AI labs are incentivized to oversell their models’ capabilities. Take Microsoft, for example: it put out a paper about GPT-4 titled “First Contact With AGI” (later renamed “Sparks of AGI”). Of course, that’s not science; it’s content marketing. But when people read that and predictably freak out about it, Microsoft goes and says, “Oh no, you’re overreacting.”

On the other hand, you have people who built their entire identity on freaking out about AGI, and the louder they freak out, the more attention and funding they attract. So they’re also incentivized to overstate the capabilities. Then the labs benefit indirectly from the fear the doomers cause, because they can raise more money at bigger valuations and attract talent who feel like they’re working on existential problems. And that makes the doomers freak out more. So there’s a perverse incentive to hype this technology and make it seem like superintelligence is just around the corner. The reality is, we’re very far from that.

GPT-2 to GPT-3 was the birth of a new technology. But GPT-3 to GPT-4 is an incremental jump. GPT-4 hallucinates less, it’s more predictable, and it has a bigger context window, but it’s just an incremental technical improvement. We’re really hitting diminishing returns on the current architecture of these models.

If you just look at the way these models are today, you’ll see that they’re going to have a very difficult time impacting the physical world enough to end humanity. Sure, if you connect GPT-4 to the US nuclear arsenal it could cause massive damage, but no one is going to do that. Even if you postulate the intelligence of these models greatly advancing, they’re still going to run into physical limits.

Mastering tasks in the real world requires considerable trial and error. Doomsday scenarios often depict AI as an omnipotent entity, but that framing is flawed. Even if we think of AI as an extremely intelligent being, it will still struggle at first with simple physical tasks, like lifting a cup of coffee. Over time, it can learn to do this. But even then, many real-world problems are computationally intractable, and an AI trying to control them will run into trouble. People miss that.

One of the great tragedies of modern times is that we inherited this world; we didn’t build it. People of the last century, who lived through the industrial revolution, knew how hard it was to build things in the physical world. But we’ve mostly lived in a world that was built for us, so we forget how hard it is.

We understand how to build the virtual world, and it’s possible AI will get very good at that. But the physical world is a different story. I think forgetting that distinction leads to all sorts of unrealistic ideas about AI dominating the world and physical space, because we don’t really have an intuition for how hard that is.

People were way undershooting on the potential of AI for a long time, and now they’re way overshooting. There’s a bipolar nature to markets like this. It’s something you have to come to terms with if you want to build companies.

Overall though, it’s super exciting to see so much progress. Especially in open source. You want to focus on the builders, and put the hype or the doom aside. That’s what’s most exciting.

How startups can use bundling and vertical integration to win in AI

We were fairly early to AI-powered development. Back in 2020, we ran a lot of code generation and code explanation experiments based on GPT-2 and GPT-3. Then last year we productized that as Ghostwriter, our Copilot-like AI assistant. It also has an AI chat, can take actions in the programming environment, and understands your projects, your output, and more.

I’ve been in the space for a long time, so I have a few thoughts on how startups and startup builders can win in AI.

The inherent benefit of Replit is that we bundle and vertically integrate the entire toolchain and lifecycle of software development. We help users go from an idea, to an end product they can see and share with friends, to a deployment they can share with the world. We’re even building tools to help developers monetize what they build.

So we’re taking people from idea to dollar. We want to shrink the distance between those two things as close to zero as possible.

Most development tools are not built this way. If you’re VS Code, you can’t do this. You have to support every use case under the sun. You have to support every idiosyncratic way that your users are used to doing things, so you can’t bundle and vertically integrate.

But our approach lets us see the entire lifecycle of software development, which gives us a fundamental advantage. For example, Ghostwriter will soon be able to see your app’s entire deployment history, along with all the logs from users using it in the wild. Because it sees the entire process, it will be able to suggest code that builds features or fixes bugs based on how your users are actually using your app.

So vertical integration becomes a superpower for us. It’s technically possible for Microsoft to build that, but it’s extremely hard because its customers aren’t asking for it. They’re not asking for bundling and vertical integration; most of them want less bundling and more customization.

There are other good examples of this. One is Tesla.

Yesterday, I was in San Francisco and I wanted to drive back to South Bay where we live. And I put in our address, and I didn’t have enough battery to get there. The Tesla automatically added the address of a Supercharger station that’s on our way home, and it used self-driving to get me there.

The vertical integration of the car, the AI, and the physical charging station created this incredible experience. It allows Tesla to suck at a lot of things, like build quality, but because it can provide an unparalleled end-to-end experience, it wins.

This type of bundling and vertical integration is something I’d recommend startups who want to build in AI think about as a strategy. It takes a certain type of founder who is very focused and able to do it for a really long time in order to build something like this.

It’s super hard. It will nearly kill you. And you can’t go for vertical integration and bundling right out of the gate. A lot of startups founded around the same time as Replit tried to do this, and they all died. A few of them raised big seed rounds, burned through them over five years, and then shut down.

You can’t get too ambitious too fast. What we did is we built a toy version of the complete thing.

When we first started the company, I expected the product would be powerful enough within a year that we could code Replit with Replit. But we’ve only just gotten to the point where that’s possible, so it took a few years.

At first it was very useful for students. So it was a toy for the lower end of the market, and then we kept making it better and more complete over time.

Building a toy is helpful because it allows you to ship. You create progress for people like investors or talent to see, and it allows you to tell if you’re building something people find useful. Whereas if you’re hiding in a corner somewhere, even if you have infinite capital, it’s really hard to have the willpower to keep going.

This stuff is important, but I don’t think founders should over-strategize. Just build something useful and get it into the hands of people. You want to think strategically, but don’t do it so early on that the magnitude of the challenge depresses you.

A book recommendation

This book has come as close as any book in history to predicting the massive change the information revolution would bring. It predicted many things we’re only now experiencing, from AI lawyers to Bitcoin.


